Abstract

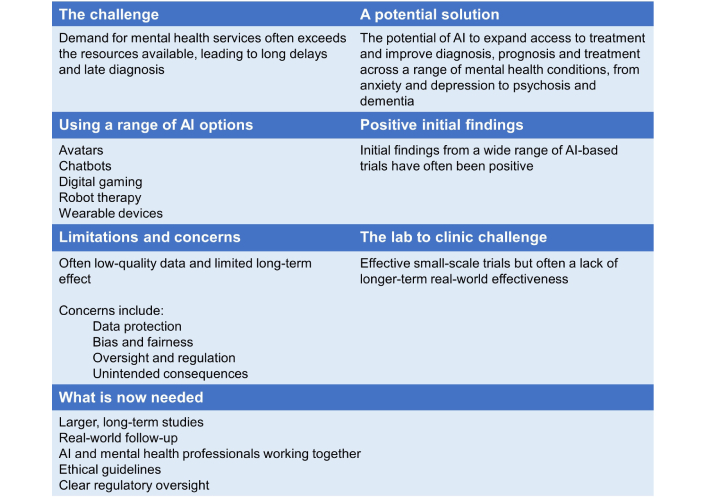

Mental healthcare in a range of countries faces challenges, including rapidly increasing demand at a time of restricted access to services, insufficient mental healthcare professionals and limited funding. This can result in long delays and late diagnosis. The use of artificial intelligence (AI) technology to help address these shortcomings is therefore being explored in a range of countries, including the UK. The recent increase in reported studies provides an opportunity to review the potential, benefits and drawbacks of this technology. Studies have included AI-based chatbots for patients with depression and anxiety symptoms; AI-facilitated approaches, including virtual reality applications in anxiety disorders; avatar therapy for patients with psychosis; AI humanoid robot-enhanced therapy, for both children and the isolated elderly in care settings; AI animal-like robots to help patients with dementia; and digital game interventions for young people with mental health conditions. Overall, the studies showed positive effects and none reported any adverse side effects. However, the quality of the data was low, mainly due to a lack of studies, a high risk of bias and the heterogeneity of the studies. Importantly, longer-term effects were often not evident. This suggests that translating small-scale, short-term trials into effective large-scale, longer-term real-world applications may be a particular challenge. While the use of AI in mental healthcare appears to have potential, it also raises important ethical and privacy concerns, a potential risk of bias, and the risk of unintended consequences such as over-diagnosis or unnecessary treatment of normal emotional experiences. More robust, longer-term research with larger patient populations, and clear regulatory frameworks and ethical guidelines to ensure that patients’ rights, privacy and well-being are protected, are therefore needed.

Keywords

Mental health, artificial intelligence, depression, anxiety, benefits, drawbacks

Introduction

Mental healthcare in the UK faces challenges including rapidly increasing demand at a time of restricted access to services, insufficient mental healthcare professionals and limited funding. This was highlighted in Lord Darzi’s recent review of the UK’s National Health Service (NHS) for the government, which reported a surge in mental health needs and the normalisation of long waits for support [1]. The increase is most striking for children and young people, for whom referrals rose by 11.7% per year from around 40,000 a month in 2016 to almost 120,000 a month in 2024, with 109,000 children and young people waiting for more than a year for first contact with mental health services following referral. The situation in the UK is not unique. A 2023 report from the European Parliamentary Research Service observed ‘While some EU countries have well-established mental health systems, others face challenges in terms of availability, affordability, and quality of services.’ [2].

The use of artificial intelligence (AI) technology to help address these shortcomings is therefore increasingly being explored, in particular the potential to screen, diagnose and treat mental illnesses [3]. For example, research for the UK Parliament, published in 2025, identified that trials and use of AI are ongoing across the UK and internationally, with important distinctions between: AI tools built for mental healthcare in the NHS that are subject to standards and regulations; consumer products for well-being with less regulatory oversight; and AI tools not intended for mental health but used by people with mental health challenges [4]. Examples in the UK itself range from ongoing research, such as the Artificial Intelligence in Mental Health (AIM) lab at King’s College London, to operational models such as Oxford VR’s social engagement programme, which is available on the NHS via Improving Access to Psychological Therapies (IAPT) and applies cognitive behavioural therapy (CBT) techniques in an immersive virtual reality setting [5, 6]. A 2018 study reported in The Lancet described this approach as having the potential to greatly increase treatment provision for mental health disorders [7].

An umbrella review of previous literature reviews revealed a potential use of AI models in the diagnosis of mental disorders such as Alzheimer’s disease, schizophrenia and bipolar disorder, with performance ranging from 21% to 100% [8]. An AI-based approach may also help to pick up early signs of anxiety and depression and promote early diagnosis of these conditions. Wearable devices integrated with AI, such as smart watches, smart glasses and, more commonly in research studies, intelligent bands, are often used for diagnosis and screening of anxiety and depression because they can continuously record data and track real-time changes. Pre-screening evaluation can also be done via these devices, and individuals notified of their need for a mental health check-up [9]. In principle, this could be extended to include the promotion of mental health and well-being, although there is less evidence to support this.

The effectiveness of AI-based interventions in treating mental health conditions, in particular anxiety and depression, has been more widely investigated, with generally promising results. AI has been employed in a variety of ways in these studies, with conversational agents (chatbots), computer programmes designed to simulate conversation (written or spoken), being the most commonly reported [10]. Other approaches include avatar therapy, developed to provide therapeutic conversations with its users, and AI-enabled robots, which are being explored to reduce stress and loneliness and improve mood, or help with social skills and facial recognition [10]. AI has also played a role in digital gaming interventions. Psycho-education programmes can be delivered as games which are easily accessible via smartphones and can improve their users’ mental health [9].

The need for increased mental health support is clear and pressing, but the human and financial resources to respond remain limited. This suggests the value of exploring the potential of AI to assist in the treatment of mental health conditions. The recent increase in reported studies in this field provides an opportunity to briefly review the potential use, benefits and drawbacks of AI-based treatment, as set out here. This is also an opportunity to help fill the current research gap, due to the lack of long-term efficacy studies, the need for more robust evidence, and the limited exploration of ethical and legal challenges in existing reviews—including the challenge of real-world implementation (moving from ‘the lab’ to ‘the clinic’) as well as ethical and legal issues ranging from potentially biased algorithms to data protection.

AI-based chatbots to treat anxiety and depression

Robust evidence for the efficacy of AI-based chatbots in alleviating depressive and anxiety symptoms in short-course treatments has been reported in a 2024 pooled data analysis of 18 randomised controlled trials (RCTs), involving 3,477 participants [11]. RCTs are considered the “gold standard” for research trials as participants are randomly assigned to two (or more) groups to test a treatment, to ensure a fair comparison. The findings showed significant improvements in depression and anxiety symptoms, which were most evident after eight weeks of treatment. However, these effects were not evident at the three-month follow-up. Certain characteristics associated with AI-based chatbots were reported to increase their beneficial effects on depression and on psychological distress [12]. These included: the use of multimodal or voice-based agents; generative AI (new responses) rather than retrieval-based AI (pre-written responses); integration with mobile/instant messaging apps; targeting clinical or subclinical and middle-aged/older populations.
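The random assignment that defines an RCT can be illustrated with a short sketch. This is a minimal example of simple randomisation, not the allocation procedure used in any of the pooled trials; the function name `randomise`, the arm labels and the seed are assumptions chosen for illustration.

```python
import random

def randomise(participant_ids, arms=("chatbot", "control"), seed=2024):
    """Shuffle participants, then deal them round-robin into trial arms.

    Hypothetical helper: arm names and seed are illustrative assumptions.
    """
    rng = random.Random(seed)  # fixed seed so the allocation is reproducible
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    allocation = {arm: [] for arm in arms}
    for index, pid in enumerate(shuffled):
        # Round-robin dealing keeps the arm sizes balanced
        allocation[arms[index % len(arms)]].append(pid)
    return allocation

# Allocate 20 hypothetical participants (IDs 1 to 20) to two arms
allocation = randomise(range(1, 21))
for arm, members in allocation.items():
    print(arm, sorted(members))
```

Because chance alone decides which arm each participant joins, known and unknown characteristics tend to balance across arms, which is what makes the comparison fair. Real trials often use more elaborate schemes, such as block or stratified randomisation.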

The pooled data analysis did not suggest reasons why longer-term effects of chatbots were not evident. However, some of the characteristics of chatbots compared with a therapeutic relationship with human practitioners may be relevant here, suggesting a short-term transactional approach rather than the building of a longer-term therapeutic relationship. For example, a 2024 article in Psychology Today observed, ‘Research shows that the therapeutic relationship, rather than any specific modality used, is the biggest predictor of success in treatment (Ardito & Rabellino, 2011). Therapy is not just about providing solutions but also about fostering a supportive and empathetic relationship, which, at least at this time, AI may struggle to deliver.’ [13]. Along similar lines, a 2024 exploratory investigation of chatbot applications in anxiety management noted that limitations persist, such as the lack of human empathy and technical challenges in understanding complex human emotions [14].

Virtual reality applications for anxiety disorders and psychosis

A study of the impact of virtual reality applications (a computer-generated environment) on phobias, social anxiety disorder, agoraphobia, and panic disorder, using head-mounted displays, showed beneficial effects. Significantly lower anxiety symptoms were demonstrated when virtual reality applications were compared to waiting-list or psycho-education-only control groups, suggesting their potential use in the treatment of anxiety disorders [15]. However, there was significant heterogeneity of the effect sizes due to the variability in study and patient characteristics between studies.
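The source does not state how heterogeneity was quantified in [15]. One widely used measure is the I² statistic, derived from Cochran's Q, and the sketch below shows how it could be computed alongside a fixed-effect inverse-variance pooled estimate. The effect sizes and variances are hypothetical numbers invented for illustration, not data from any cited study, and the function name is an assumption.

```python
def pooled_effect_and_i2(effects, variances):
    """Fixed-effect inverse-variance pooling with Cochran's Q and I-squared (%)."""
    weights = [1.0 / v for v in variances]  # more precise studies weigh more
    pooled = sum(w * e for w, e in zip(weights, effects)) / sum(weights)
    # Cochran's Q: weighted squared deviations of each study from the pooled effect
    q = sum(w * (e - pooled) ** 2 for w, e in zip(weights, effects))
    df = len(effects) - 1
    # I-squared: share of total variation attributed to between-study differences
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return pooled, q, i2

# Hypothetical standardised mean differences (negative = lower anxiety) and variances
effects = [-0.9, -0.3, -1.2, -0.5]
variances = [0.04, 0.05, 0.06, 0.03]

pooled, q, i2 = pooled_effect_and_i2(effects, variances)
print(f"pooled effect = {pooled:.2f}, Q = {q:.2f}, I2 = {i2:.0f}%")
```

A high I² indicates that the studies differ from one another by more than chance would explain, so a single pooled figure should be interpreted with caution.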

Avatars, computer-generated representations of an individual in a virtual reality environment, have also been used with some success as a therapy for distressing voices experienced by patients with psychosis [16]. In a randomised phase 2/3 trial of 345 participants, voice-related distress and overall voice severity significantly improved at 16 weeks, compared with treatment as usual. These improvements were maintained at 28 weeks but were no longer statistically significant. Voice frequency was also reduced after 16 weeks of an extended version of the avatar therapy. These findings are promising, particularly as existing treatments for distressing voices are currently suboptimal and new treatments are needed. There is also evidence that avatar therapy can have an impact on the quality of life of patients with psychosis. A qualitative study using semi-guided interviews with patients with treatment-resistant schizophrenia revealed that patients presented with fewer psychotic symptoms, better self-esteem, more hobbies and projects, and an overall improved lifestyle and mood following avatar therapy [17].

AI robot therapy

AI humanoid robots are being increasingly used for the treatment of children with autism spectrum disorder (ASD). Two of the most widely used are Nao (which is designed to improve facial recognition and appropriate gaze response) and KASPAR (which is designed to improve social skills) [18]. Interestingly, initial studies suggest that children with ASD show greater improvements in social, cognitive and emotional skills in response to robotic intervention than conventional human therapy [19]. At the other end of the age spectrum, Pepper (a humanoid robot) has been used in elderly care settings, including nursing homes, to provide companionship, and help to reduce feelings of loneliness and isolation, as well as other more practical applications such as reminding patients to take medications [18].

Intelligent animal-like robots such as Paro, a lifelike fluffy harp seal designed by a Japanese engineer in 2004, are increasingly being used to help patients with dementia. A 2024 pooled analysis of 12 studies involving 1,461 participants showed that Paro had a moderate effect on medication use, and a small effect on anxiety, agitation, and depression [20]. Qualitative data from the studies suggested that Paro reduced apathy and increased sociability. However, the overall quality of evidence for all outcomes was graded as low due to methodological limitations, small sample size and wide confidence intervals.

The effects of interventions mediated by automated conversational agents (including chatbots, agents with virtual representation, and robots with a physical representation) on the emotional component of the mental health of young people have also been recently reviewed [21]. Overall, the results showed that these interventions were acceptable, engaging and highly usable for emotional problems. However, the results for clinical efficacy were far less conclusive, with almost half of the evaluation studies reporting no significant effect on emotional and mental health outcomes. Furthermore, the findings of a 2019 pooled analysis of 50 studies of self-help/guided self-help interventions suggested that at least minimal support from a therapist may be needed for self-guided, digital interventions to be effective in young people [22].

AI digital gaming interventions

There is a growing body of research exploring the advantages of the use of digital game interventions for improving attention span and memory, managing emotions, promoting behaviour change and supporting treatment for mental illness. This approach is particularly relevant to young people, for whom the use of technologies to receive information and mental health support is appealing. Digital gaming interventions have been utilized in conditions such as ASD, attention deficit disorders, schizophrenia, depression, and anxiety disorders. A scoping review of 49 studies testing 32 digital video games supported the potential integration of digital games into mental health treatment or prevention [23]. The studies covered young people who were undiagnosed, who were at risk of mental health conditions, or who had mild to moderate conditions (nearly half of the studies) or severe conditions. Digital game interventions were effective, user satisfaction and acceptability were good, and the studies reported relatively high programme retention rates.

One potential advantage of a digital gaming approach for young people is that it takes advantage of their natural desire to play. Play has the power to engage children and young people, creating dispositions for intrinsic learning which are self-motivating and inherently rewarding [24]. This may help explain why the demographic with the greatest proportion of players taking part in online gaming was aged 16–24 (with no data available for under 16s from this source)—again suggesting the advantage of a digital gaming approach for young people [25].

However, a 2024 review of reviews concluded that, while digital games for mental health are promising, in order to be consistently effective, they need to be co-created with young people, immersive, personalized and pinpoint a specific disease like depression or anxiety, rather than mental health in general [26]. Perhaps counterintuitively, a 2024 meta-analysis suggested some (albeit limited) evidence that digital interventions might be more effective for the elderly [27].

As regards digital gaming interventions generally, there may be an increased risk of gaming addiction, with growing concerns regarding internet gaming disorder (particularly for males and those with impulsivity as a personality characteristic), as well as over-reliance on technology [28]. It may also be the case that digital interventions are more likely to be pursued by young men, rather than young women, given female concerns regarding cybersexism [29].

Concerns around the use of AI in mental health care

AI-based mental healthcare raises important ethical and privacy concerns [30] including:

Data privacy and security: Whilst data privacy and security are important for all health-related data, the sensitive nature of data relating to a patient’s mental health means this is particularly important.

Informed consent: The sensitive nature of mental health information also emphasises the importance of patients being aware of, understanding, and agreeing on how their data will be used.

Transparency: AI algorithms are a relatively recent development and how they have been constructed may not be clear to either patients or physicians; transparency is therefore needed to ensure confidence, trust and accountability.

Bias and fairness: Any biases in the data on which AI algorithms are based (for example in relation to gender, race or age) could lead to potential disparities in diagnosis and treatment recommendations, and a lack of understanding about cultural differences [31].

Oversight and regulation: AI systems may operate with unchecked biases or inaccuracies, which run the risk of leading to harmful recommendations to those who need support. Hence the need for oversight and regulation.

Human oversight: This is an important safeguard, to reduce the risk of error and ensure ethical principles are maintained.

Accountability and liability: It must be possible to determine who is responsible in cases of adverse outcomes caused by AI recommendations.

Patient-provider relationships: The introduction of AI may affect the relationship between patients and their providers.

Unintended consequences: These could include inadvertent reinforcement of stigmatisation, over-diagnosis or unnecessary medical treatment of normal emotional experiences.
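The bias and fairness concern listed above can be made concrete with a simple audit. The sketch below compares how often a hypothetical screening tool flags people in two demographic groups; the records, the group labels and the function name are invented for illustration and do not come from any cited study. A gap in flag rates is only a prompt for further investigation, since the groups may genuinely differ in need.

```python
from collections import defaultdict

def flag_rate_by_group(records):
    """Return the share of people flagged by the tool within each group."""
    flagged = defaultdict(int)
    total = defaultdict(int)
    for group, was_flagged in records:
        total[group] += 1
        flagged[group] += int(was_flagged)
    return {group: flagged[group] / total[group] for group in total}

# Hypothetical screening output: (group label, flagged for follow-up?)
records = [
    ("A", True), ("A", False), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = flag_rate_by_group(records)
gap = max(rates.values()) - min(rates.values())  # difference between highest and lowest rate
print(rates, f"gap = {gap:.2f}")
```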

The challenge of real-world implementation

The studies reported in peer-reviewed journals have typically taken place in a short-term ‘lab’ setting rather than in prolonged ‘real-world’ use. As the Oxford Internet Institute notes, “it is important to remember that building an accurate or high-performing AI model and writing about it in an academic publication is not the same as building an AI model that is ready for deployment in a clinical system. Moving from ‘the lab’ to ‘the clinic’ is a key part of the transition and yet very few AI models have successfully made the leap across this ‘chasm’” [32]. This may explain why studies sometimes show short-term effectiveness during a research trial but a lack of evidence of clinically significant effectiveness in longer-term follow-up.

Conclusions

The use of AI in mental healthcare has the potential to offer many benefits, in particular in countries with over-stretched mental health services—from improving initial diagnosis through to expanded access to mental health support and personalized treatment. However, more robust long-term studies with larger patient populations are needed to investigate whether chatbots, and other AI-based agents, can demonstrate long-term efficacy and become valuable additional tools in the treatment of people with mental health conditions.

As identified above, the use of AI in mental healthcare also raises important ethical and privacy concerns. This highlights the need for clear regulatory frameworks and ethical guidelines to protect patients’ rights, privacy, and well-being. The development of ethical guidelines for research (including an ethics of care approach) is therefore important, as is the integration of AI with human oversight [33].

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| AI | artificial intelligence |
| ASD | autism spectrum disorder |
| NHS | National Health Service |

Declarations

Author contributions

BB: Investigation, Writing—original draft, Writing—review & editing. MB: Conceptualization, Investigation, Writing—review & editing. Both authors read and approved the submitted version.

Conflicts of interest

The authors declare that they have no conflicts of interest.

Ethical approval

Not applicable.

Consent to participate

Not applicable.

Consent to publication

Not applicable.

Availability of data and materials

Not applicable.

Funding

Not applicable.

Copyright

© The Author(s) 2025.

Publisher’s note

Open Exploration maintains a neutral stance on jurisdictional claims in published institutional affiliations and maps. All opinions expressed in this article are the personal views of the author(s) and do not represent the stance of the editorial team or the publisher.